AI assistants.

Rapid MVP design in a fast-growing AI market

A scalable system of AI Assistants that simplifies complex workflows, evolves through real user behaviour, and delivers meaningful value beyond novelty.

100%

Stakeholder approval for direction and feature roadmap

2x

Faster design iterations enabled by Lovable prototypes

90%

Of test participants said features were helpful in their daily workflow

Challenge.

How could AI Assistants meaningfully support event planning, learning, and reporting within our platform? The project focused on task automation and user value rather than novelty, and it needed to balance long-term product thinking with rapid delivery to meet stakeholder expectations.

My role

Lead product designer

Success criteria

- AI Assistants support real user workflows

- Secure client engagement and stakeholder buy-in

- Fast track to implementation through rapid prototyping

- Built on a scalable AI system for future expansion

Solution.

Through rapid prototyping and deep research, we designed four distinct AI Assistants:

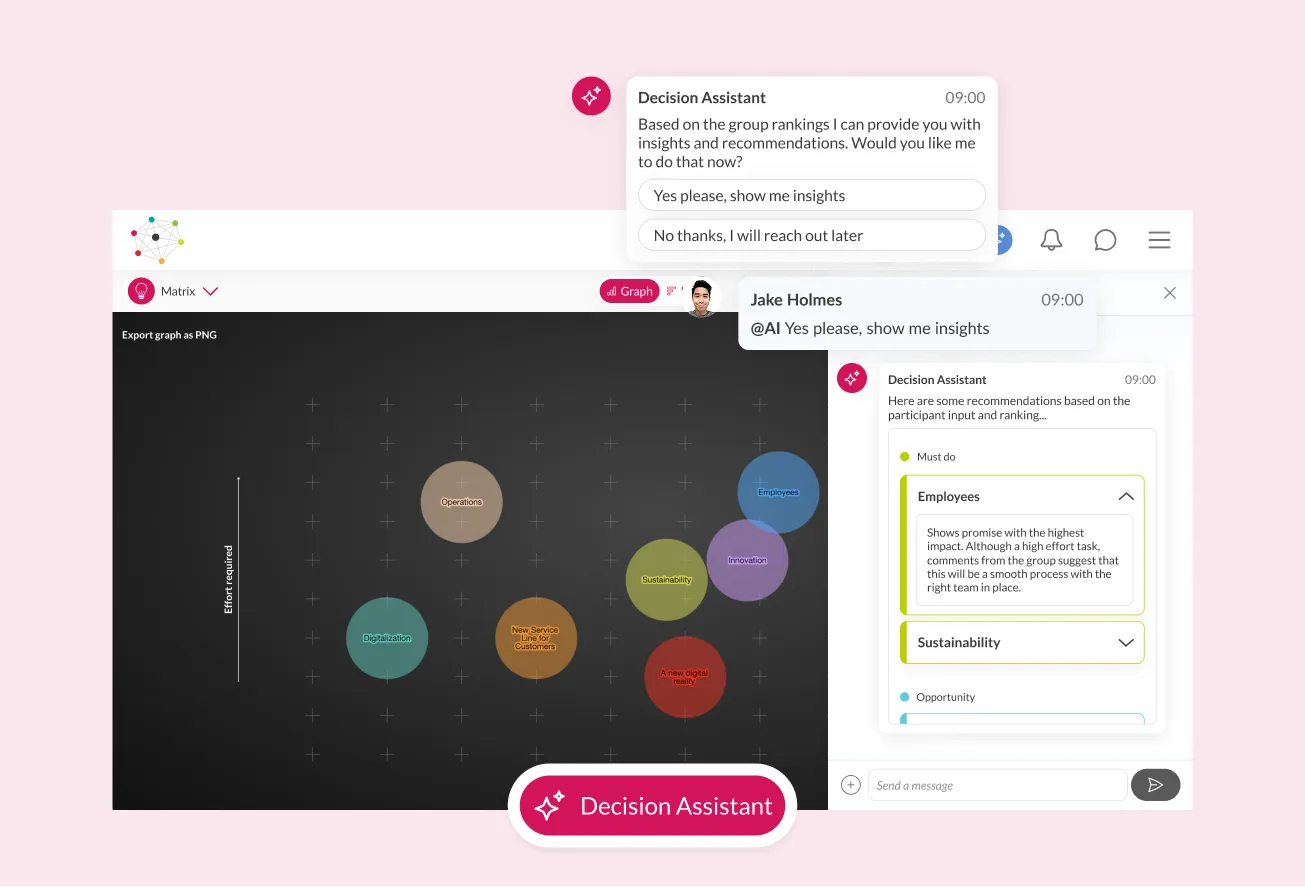

- Decision Assistant for synthesising participant inputs and surfacing insights

- Learning Assistant to recommend content and assist onboarding

- Production Assistant to streamline event setup for producers

- Reporting Assistant to deliver engagement analytics and performance metrics

User testing later revealed friction in switching between assistants. Based on this, we merged the Production and Reporting Assistants into a single, streamlined Admin Assistant, simplifying workflows and improving clarity.

Project delivery.

Discovery & concept validation.

Discussions with producers and internal teams revealed pain points around access to data, setup, and user guidance. I translated these into early mockups and animated demos to illustrate agent concepts, which we shared in client presentations to spark excitement and collect initial feedback.

Project Highlight

Advocated for a user-centred AI strategy while delivering rapid prototypes and messaging to secure funding and stakeholder alignment.

Competitor research.

Following client buy-in, we conducted deeper research into emerging AI usage patterns across event apps, learning platforms, and general chatbots. This allowed us to identify common UX approaches, gaps in the market, and opportunities to create a more intuitive, workflow-driven experience.

Project Highlight

Guided junior designers through real-world research activities, building their confidence in planning, facilitation, and user-centred synthesis.

Prompt sourcing.

To ground the agents in real workflows, I led a phased research process: first sourcing user prompts through surveys, then clustering them into task categories with super users, and finally consolidating them into a living Prompt Directory. From this, we curated a list of suggested prompts per agent, helping users discover capabilities without feeling overwhelmed.
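As a rough illustration of how a Prompt Directory could feed the per-agent suggestions, here is a minimal Python sketch. It is not the project's actual data model: the `PromptEntry` fields, the `mentions` count used for ranking, and the `suggested_prompts` helper are all assumptions made for this example.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class PromptEntry:
    """One sourced prompt in the directory (all fields are hypothetical)."""
    text: str       # the prompt as a user phrased it
    agent: str      # the assistant the prompt was assigned to
    category: str   # task category agreed with super users
    mentions: int   # assumed ranking signal: how often the prompt came up


def suggested_prompts(directory, per_agent=3):
    """Return the most-mentioned prompts for each agent, capped at per_agent."""
    by_agent = defaultdict(list)
    for entry in directory:
        by_agent[entry.agent].append(entry)
    return {
        agent: [
            e.text
            for e in sorted(entries, key=lambda e: e.mentions, reverse=True)[:per_agent]
        ]
        for agent, entries in by_agent.items()
    }
```

Capping the list per agent mirrors the goal of helping users discover capabilities without showing them the whole directory at once.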

Key leadership moment

I led the sourcing and categorisation of 150+ real user prompts, turning vague needs into clear agent roles. By prioritising based on value and feasibility, I helped the team build AI tools that solved real admin pain points.

What were we solving?

Key problems to solve

- How do we give producers quick access to settings and data through a conversational UI?

- How do we give participants quick access to content and recommendations?

- How do we keep momentum within the current AI landscape?

Who were we solving for?

Core user personas

- Participants: Looking for content recommendations and guidance on what to do next

- Producers: Looking to speed up their workflow

Base chat UI.

We designed a scalable chat interface to support AI-driven workflows. Defining the layout, message types (text, links, outputs), and system states like “thinking” and “responding” became the foundation for a modular design system built to handle evolving AI interactions.
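To make the building blocks concrete, the message types and system states could be modelled along these lines. This is a minimal Python sketch under stated assumptions, not the production implementation: the `idle` state, the `ChatMessage` fields, and the transition rules are hypothetical additions for illustration.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class MessageType(Enum):
    TEXT = "text"
    LINK = "link"
    OUTPUT = "output"


class SystemState(Enum):
    IDLE = "idle"              # assumed resting state, not named in the case study
    THINKING = "thinking"
    RESPONDING = "responding"


@dataclass
class ChatMessage:
    sender: str                # "user" or "assistant" (hypothetical field)
    type: MessageType
    body: str
    url: Optional[str] = None  # only meaningful for LINK messages


# Assumed transition rules: idle -> thinking -> responding -> idle.
TRANSITIONS = {
    SystemState.IDLE: {SystemState.THINKING},
    SystemState.THINKING: {SystemState.RESPONDING},
    SystemState.RESPONDING: {SystemState.IDLE},
}


def next_state(current: SystemState, target: SystemState) -> SystemState:
    """Return target if the move is allowed, otherwise raise ValueError."""
    if target not in TRANSITIONS[current]:
        raise ValueError(f"cannot go from {current.value} to {target.value}")
    return target
```

Keeping message types and states as small closed sets is one way to let new response components be added later without changing the chat shell itself.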

Lovable prototypes.

We used Lovable to quickly test how prompts were surfaced, discovered, and triggered within the chat. The prototype used static responses to simulate real interactions, allowing us to validate flow, structure, and user expectations at speed.

Sketching the next evolution.

We explored how more complex, component-based responses could surface within the chat, sketching flows for a dynamic workspace that could display AI-enhanced forms, tiles, and visual outputs like charts and graphs based on user prompts.

Project Highlight

Introduced Lovable as a new prototyping tool, enabling faster iteration and more realistic interaction testing, expanding beyond Figma’s limitations to validate full chat flows.

Design system expansion.

Using the Prompt Directory as a foundation, I defined scalable response patterns mapped to real workflows, including charts, cards, and forms. Together, these components formed a dynamic system through which the AI could surface settings and content.

Future thinking.

Throughout the design process, we kept the future of the AI Assistants in mind and created concepts for the ideas we considered viable.

Testing & feedback.

Pivotal findings from testing.

User testing made it clear that people didn’t want to choose between assistants. This led to a major product decision to merge the Production and Reporting Assistants into a unified Admin Assistant. Another issue, found when testing agents in a group activity, led to a shift from a group-chat model to a thread-based interaction. We prototyped and validated the new flow using Lovable.

Key leadership moment

When testing revealed that users preferred a unified assistant, I led the strategic decision to merge the Production and Reporting Assistants into a single Admin Assistant. This decision simplified the experience and aligned the product with real user expectations.

Collaboration with developers.

I worked closely with developers to align task categories and intents with AI training goals. We explored context-aware prompting for varied response types, ensuring design and logic evolved in sync.

Next steps and future roadmap.

Development is now underway, using validated prototypes to build a flexible, scalable AI chat foundation. Agents are being trained on real task datasets, with post-launch iterations driven by live user behaviour and feedback.

Following the beta launch next quarter, we’ll analyse real usage patterns, expand prompt coverage based on high-frequency tasks, and refine interaction models as user familiarity grows.

Key Contributions

- Shaped a user-centred AI product vision aligned with business needs

- Led phased research, validation, and iterative design cycles

- Mentored junior designers across research, prototyping, and documentation

- Made strategic design pivots based on user testing and product impact

- Aligned UX, AI training, and development into a scalable, future-proof system
