AI agents for events.

Rapid MVP production in a fast-growing AI market

Key metrics

100%

Stakeholder approval for direction and feature roadmap

2x

Faster design iteration enabled by Lovable prototypes

90%

Of test participants said features were helpful in their daily workflow

Project summary

As the Lead Product Designer for this initiative, I helped shape and deliver a user-centred AI solution for a virtual event platform. Through rapid prototyping, deep research, and cross-functional collaboration, we designed a scalable system of AI agents that simplifies complex workflows, evolves through real user behaviour, and delivers meaningful value beyond novelty.

Challenge.

We set out to define how AI agents could enhance virtual event experiences in meaningful, task-driven ways—solving real pain points around event planning, learning, engagement, and reporting, all through intuitive and accessible interactions.

From the outset, I shaped the research and design vision to ensure the AI offering would deliver meaningful user value, not just novelty. Balancing this long-term goal with the need for rapid delivery was a key part of the project strategy.

We knew designing for AI would be iterative: the system would need to adapt and evolve through real-world use, continuous testing, and feedback.

My role

Lead product designer

Project duration

Ongoing (Initial Research, Prototyping & Beta Development)

Leadership Highlight

I led the end-to-end research and design phases, balancing stakeholders' demand for speed with a user-centred approach. I also mentored a junior product designer across research activities, prototyping, and design system documentation.

Solution.

We initially designed four AI agents:

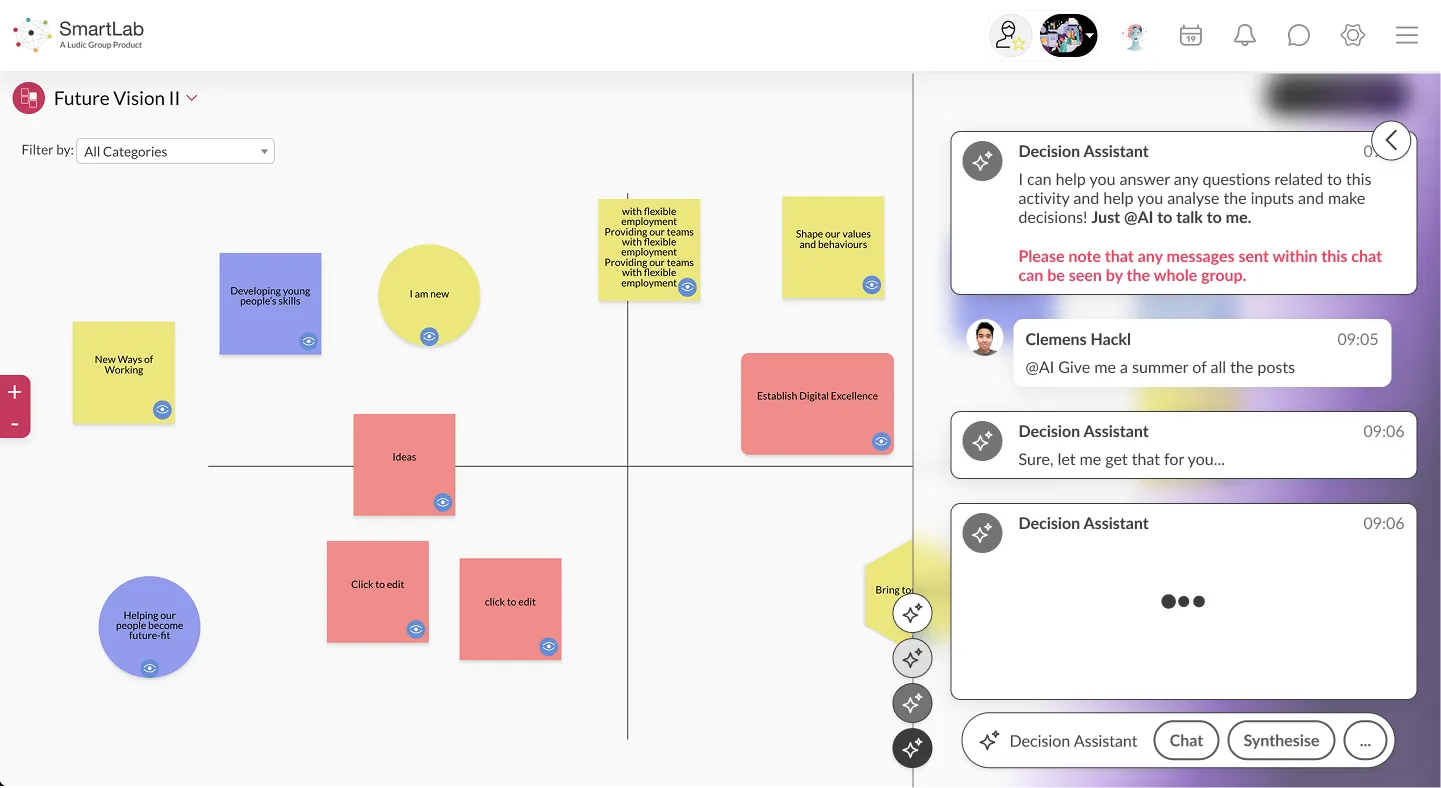

- Decision Agent: Analyzes participant inputs, synthesizes data, and generates visual report outputs.

- Learning Agent: Recommends platform content, relevant resources, and assists with user onboarding.

- Production Agent: Helps event producers quickly configure virtual event environments via prompts, reducing setup time and guiding new admins.

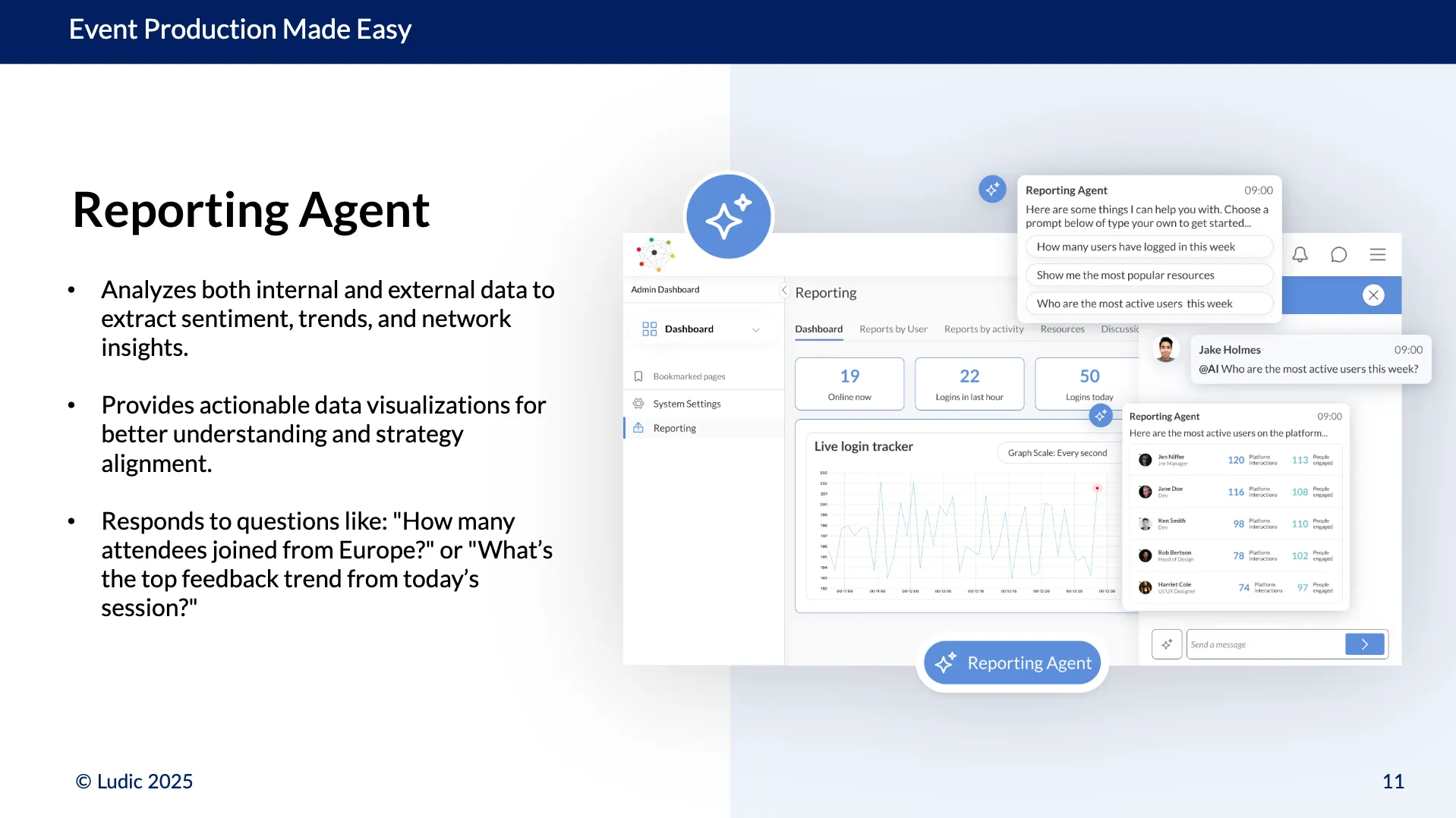

- Reporting Agent: Provides comprehensive reporting on engagement, global uptake, and key performance metrics.

Early teaser mockups, animated demos, and a communications pack helped secure client excitement and internal budget approval.

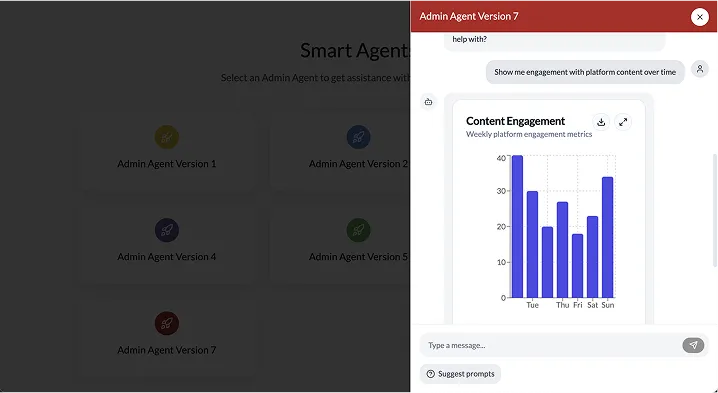

Later, based on user feedback, we evolved the strategy, merging the Production and Reporting agents into a unified Admin Agent for a more seamless experience.

Key Metrics

- Defined, validated AI use cases aligned with real user workflows

- Secured client engagement and stakeholder buy-in

- Balanced rapid prototyping with strategic research depth

- Made evidence-based design pivots to simplify the user experience

- Built a scalable AI design system for future expansion

Key leadership moment

When integrating AI agents into existing workflows, I co-led cross-functional sessions with stakeholders, engineers, and product managers to clarify agent capabilities and limitations. This ensured alignment on technical feasibility and user expectations, reducing scope creep and improving delivery predictability.

Research.

Stakeholder & user discussions

To understand the potential applications of AI in virtual events, I conducted discussions with event producers and internal teams. These conversations highlighted common challenges, such as inefficient data synthesis, cumbersome event setup processes, and the need for improved user engagement tools.

Early Concept Validation

To secure early buy-in, I created fast, research-informed mockups and animated teaser demos showcasing agent concepts.

Leadership Highlight

Advocated for a user-centred AI strategy while delivering rapid prototypes and messaging to secure funding and stakeholder alignment.

Client communications

Alongside the animation, we presented to key clients to build excitement and document our AI roadmap. This allowed us to gather feedback and insights early.

Competitor research

Following client buy-in, we conducted deeper research into emerging AI usage patterns across event apps, learning platforms, and general chatbots. This allowed us to identify common UX approaches, gaps in the market, and opportunities to create a more intuitive, workflow-driven experience.

Prompt Sourcing & User Research

I designed a phased research strategy to capture real user workflows and pain points:

- Prompt Sourcing Surveys – We asked users to imagine project scenarios and describe how an AI agent could assist them.

- Prompt Sorting Workshops – A small group of super users helped cluster prompts into actionable Task Categories.

- Prompt Directory – We consolidated all prompts, categories, and labeled intents into a living reference document to support both UX design and AI training.

- Suggested Prompt Catalogue – From the research, we curated a manageable list of suggested prompts per agent, making capabilities visible without overwhelming users.
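One way to picture the Prompt Directory, and the Suggested Prompt Catalogue derived from it, is as a small typed data set. The sketch below is illustrative only: the field names, the `frequency` score, and the `buildSuggestedCatalogue` helper are assumptions made for this example, not the project's actual schema or curation method.

```typescript
// Agent names come from the case study; everything else here is hypothetical.
type AgentName = "Decision" | "Learning" | "Production" | "Reporting";

// Assumed shape of one Prompt Directory entry.
interface PromptEntry {
  text: string;         // prompt wording collected from users
  agent: AgentName;     // agent the prompt was assigned to
  taskCategory: string; // cluster produced in the sorting workshops
  intent: string;       // labeled intent
  frequency: number;    // assumed count of similar submissions
}

// Keep at most `limit` prompts per agent, most frequent first,
// so capabilities stay visible without overwhelming users.
function buildSuggestedCatalogue(
  directory: PromptEntry[],
  limit: number
): Record<AgentName, string[]> {
  const catalogue: Record<AgentName, string[]> = {
    Decision: [],
    Learning: [],
    Production: [],
    Reporting: [],
  };
  // Copy before sorting so the directory itself is left untouched.
  const ranked = [...directory].sort((a, b) => b.frequency - a.frequency);
  for (const entry of ranked) {
    const suggestions = catalogue[entry.agent];
    if (suggestions.length < limit) {
      suggestions.push(entry.text);
    }
  }
  return catalogue;
}
```

Ranking by frequency is only one plausible curation rule. In practice the shortlist could equally be hand-picked from the workshop output.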

Alongside driving the research plan, I mentored a junior product designer through planning and facilitating user interviews, card sorting workshops, and usability testing.

Leadership Highlight

Guided a junior designer through real-world research activities, building their confidence in planning, facilitation, and user-centred synthesis.

Key leadership moment

I led the sourcing and categorisation of 150+ real user prompts, turning vague needs into clear agent roles. By prioritising based on value and feasibility, I helped the team build AI tools that solved real admin pain points—making them practical, not performative.

Design.

Base Chat UI

We designed a scalable chat interface, including:

- Chat window and layout

- Message types: standard reply, reply with links, thinking state, and output state for visual/analytical data

This formed the foundation of a flexible design system for AI-driven workflows.
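For development handoff, the four message types listed above could be modelled as a discriminated union. This is a minimal sketch, assuming hypothetical names (`ChatMessage`, `kind`, `describeMessage`) and payload fields that are not taken from the actual design system.

```typescript
// Hypothetical model of the four message types in the base chat UI.
type ChatMessage =
  | { kind: "reply"; text: string }
  | { kind: "replyWithLinks"; text: string; links: { label: string; url: string }[] }
  | { kind: "thinking" }
  | { kind: "output"; title: string; data: { label: string; value: number }[] };

// Return a plain-text summary of a message, for example as a fallback label.
// The switch is exhaustive: adding a new message type without handling it
// here becomes a compile-time error through the `never` check.
function describeMessage(message: ChatMessage): string {
  switch (message.kind) {
    case "reply":
      return message.text;
    case "replyWithLinks":
      return `${message.text} (${message.links.length} links)`;
    case "thinking":
      return "Thinking...";
    case "output":
      return `${message.title}: ${message.data.length} data points`;
    default: {
      const unreachable: never = message;
      return unreachable;
    }
  }
}
```

Tagging each variant with a `kind` field keeps the set of message types explicit, which suits a design system that is expected to grow.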

Sketching the next evolution

We began to consider how more complex interactions would work, exploring a dynamic workspace in which different web components are surfaced based on the user's prompt, including:

- Forms (enhanced by AI generated content)

- Tiles

- Graphs and charts

Lovable Prototypes

Using Lovable, we rapidly validated:

- Prompt categorisation and surfacing

- Suggested task discoverability

- Overall chat interaction experience

The prototype simulated workflows with static responses for fast, realistic feedback.

Leadership Highlight

Introduced Lovable as a new prototyping tool, enabling faster iteration and more realistic interaction testing—expanding beyond Figma’s limitations to validate full chat flows.

First prototype to test prompt categorisation and discoverability

Next iteration based on feedback to combine production and reporting prompts

Chosen iteration based on user testing and feedback

Prototyping of dynamic workspace for advanced interactions

Design System Expansion

Drawing from the Prompt Directory, I mapped out optimal response types—text, links, UI cards, or full-screen forms—based on user workflow needs.

Each pattern was validated through prototype testing.

I documented these patterns systematically for development handoff and guided the junior designer in contributing scalable system assets.
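The mapping from workflow need to response type can be pictured as a simple decision rule. The sketch below is an assumption-heavy illustration: the `WorkflowNeed` fields, the threshold of three input fields, and the `chooseResponsePattern` helper are invented for this example and do not describe the documented patterns themselves.

```typescript
// The four response types named in the case study.
type ResponsePattern = "text" | "links" | "card" | "fullScreenForm";

// Hypothetical description of what a user task needs from the response.
interface WorkflowNeed {
  inputFieldsRequired: number;  // values the user must supply to finish the task
  hasStructuredResult: boolean; // answer is a compact summary or object
  referencesResources: boolean; // answer points at existing content
}

// Illustrative rule: heavier tasks get richer UI, simple answers stay as text.
function chooseResponsePattern(need: WorkflowNeed): ResponsePattern {
  if (need.inputFieldsRequired > 3) return "fullScreenForm"; // assumed threshold
  if (need.hasStructuredResult) return "card";
  if (need.referencesResources) return "links";
  return "text";
}
```

A rule like this is only a starting point. The case study describes validating each pattern through prototype testing rather than deriving it from fixed thresholds.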

Leadership Highlight

Designed a flexible AI design system built for rapid evolution, while mentoring junior contributors on scalable design practices.

Future thinking

While designing, we kept the future of the AI agents in mind and created concepts for the ideas that looked viable.

Testing.

Testing surfaced a pivotal finding:

Users consistently preferred a single assistant for all admin and reporting tasks, rather than choosing between agents.

This led to a major product decision:

- Merging the Production and Reporting agents into a single Admin Agent

- Changing group chat interactions to a discussion thread

- Rapidly prototyping and validating new streamlined workflows

Leadership Highlight

Drove a major product experience pivot through evidence-based design leadership, simplifying AI interactions to match real user needs.

Adjustments made to the Decision Agent for group chat based on user feedback. For this, we prototyped a more specific scenario in which the agent would be used.

Key leadership moment

When testing revealed that users preferred a unified assistant, I led the strategic decision to merge the Production and Reporting agents into a single Admin Agent. This decision simplified the experience, aligning the product with real user expectations and driving a significant pivot in our conversational AI design.

Development.

Collaboration with Development

Throughout, I collaborated closely with developers:

- Aligning task categories and intents to support AI training

- Brainstorming context-aware prompting based on user workflow states

- Delivering system documentation for diverse AI response types

Development & Future Outlook

Development is now underway, using the validated designs to build the flexible AI-driven chat foundation and train agents against real-world task datasets. Real user data will guide post-launch iterations, ensuring continuous relevance and value.

Next Steps & Roadmap

After beta launch next quarter, we will:

- Analyse real usage behaviour and workflows

- Expand prompt coverage based on task frequency

- Evolve interaction patterns to stay intuitive as user comfort grows

The AI agent system is designed for continuous learning, enabling ongoing refinement based on real-world engagement.

Key Contributions

- Shaped a user-centred AI product vision aligned with business needs

- Led phased research, validation, and iterative design cycles

- Mentored junior designers across research, prototyping, and documentation

- Made strategic design pivots based on user testing and product impact

- Aligned UX, AI training, and development into a scalable, future-proof system